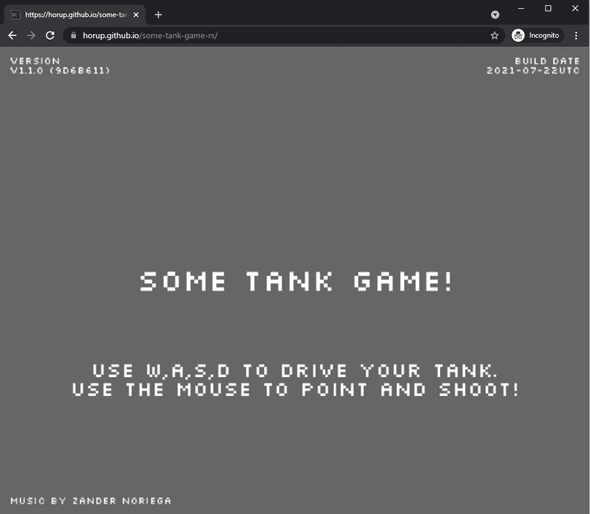

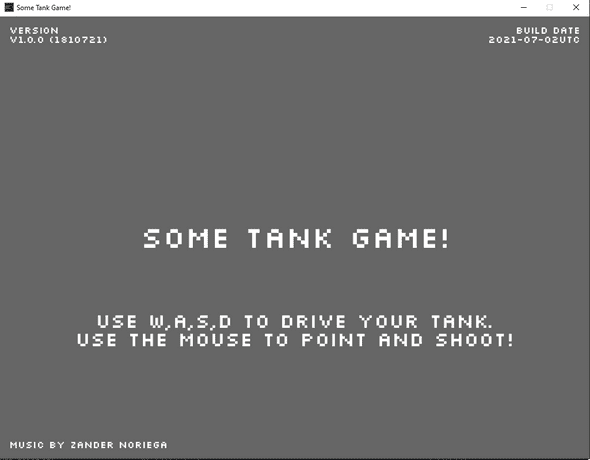

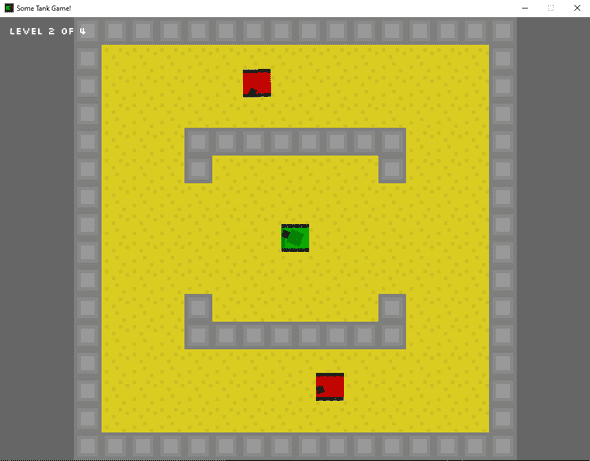

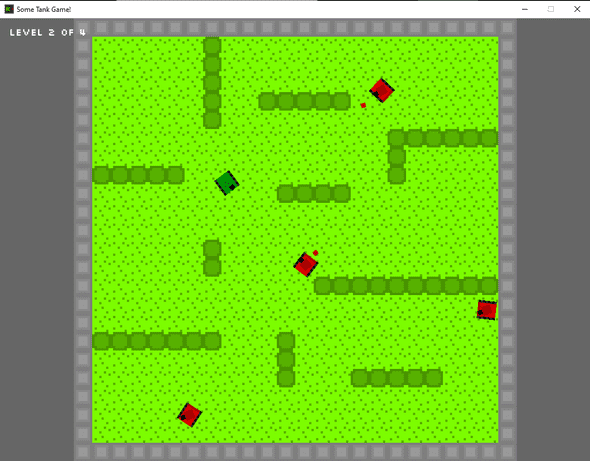

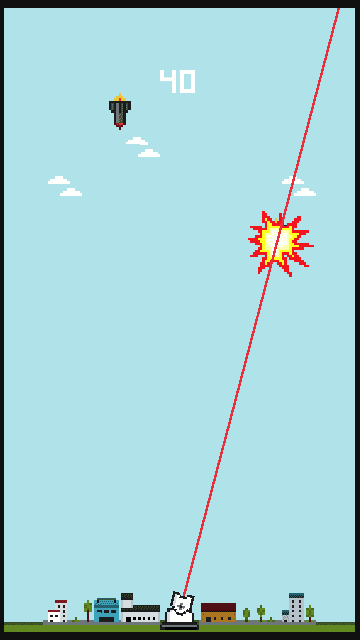

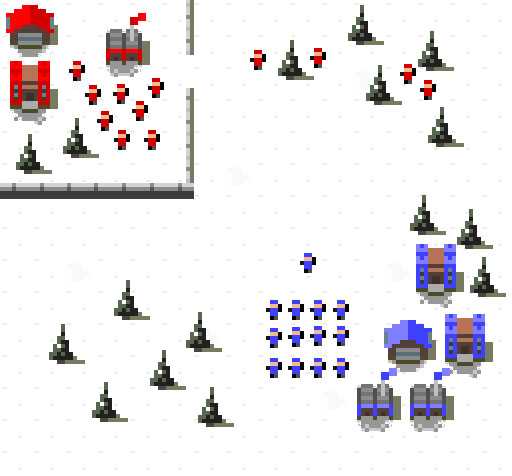

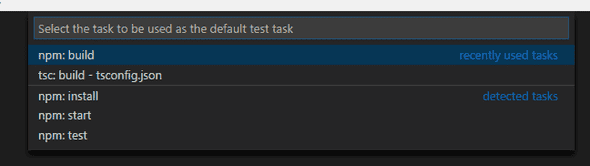

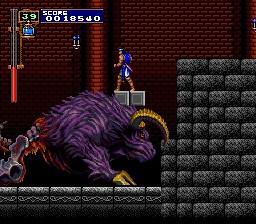

"Some Tank Game" in the browser!

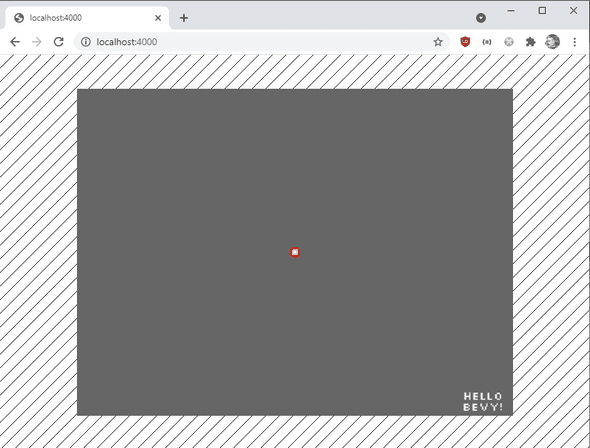

August 05, 2021 - Søren Alsbjerg Hørup

After getting Bevy to run in the browser, I started the process of porting Some Tank Game to the browser.

The first step was to refactor my main.rs into a lib.rs so that I could use wasm-bindgen to generate JavaScript bindings for my entry point and use the bevy_webgl2 plugin to render in the browser.

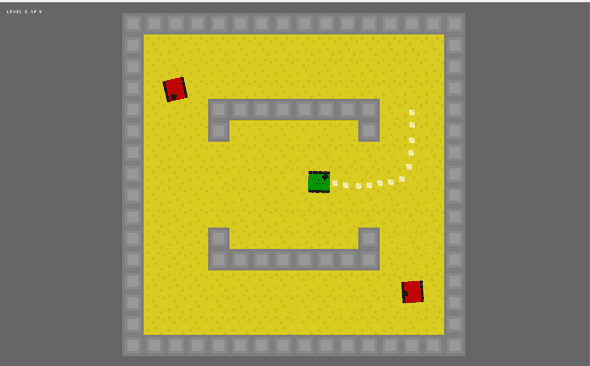

Next step was to get my levels to properly load. For Some Tank Game I use the Tiled editor to create and edit levels. The library I use also works in the browser, BUT! apparently not with external tile sets.

The lack of support for external tile sets seems to be due to the async nature of Ajax requests. First, the .tmx map file is loaded. Next, the external tile sets (.tsx files) it references are loaded. In a native environment this happens sequentially with blocking I/O, and the tiled struct is returned after all data and dependencies have been loaded. In the browser, only the .tmx file is loaded; the external tile sets cannot be fetched as part of that same load because browser requests are asynchronous. The open issue on the subject can be found here.

To fix this issue, I opted for the simplest strategy ever - just embed the tile sets into the .tmx files. Not pretty, but working. A proper fix would be to somehow pre-load the tile sets before loading the maps, such that these are available from the start.
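The pre-loading idea could look roughly like this: scan the map XML (a .tmx file in Tiled's format) for external tile set references, fetch those files first, and only then load the map. This is a minimal sketch using only the standard library. `external_tilesets` and `TilesetCache` are hypothetical names, not part of the tiled crate, and the naive string scan stands in for a real XML parser.

```rust
use std::collections::HashMap;

/// Collects the `source` attribute of every `<tileset ...>` opening tag in a TMX map.
/// Naive string scan for illustration only; a real implementation would parse the XML.
pub fn external_tilesets(tmx: &str) -> Vec<String> {
    let mut sources = Vec::new();
    let mut rest = tmx;
    while let Some(start) = rest.find("<tileset") {
        let after = &rest[start..];
        // The opening tag ends at the first '>'.
        let end = after.find('>').unwrap_or(after.len());
        let tag = &after[..end];
        // Embedded tile sets have no `source` attribute on the tag itself.
        if let Some(pos) = tag.find("source=\"") {
            let value = &tag[pos + "source=\"".len()..];
            if let Some(close) = value.find('"') {
                sources.push(value[..close].to_string());
            }
        }
        rest = &after[end..];
    }
    sources
}

/// Tile set files fetched ahead of time, keyed by the path used in the map.
#[derive(Default)]
pub struct TilesetCache {
    files: HashMap<String, Vec<u8>>,
}

impl TilesetCache {
    /// Stores the bytes of a tile set that has finished downloading.
    pub fn insert(&mut self, source: &str, bytes: Vec<u8>) {
        self.files.insert(source.to_string(), bytes);
    }

    /// Returns the sources the map references that have not been loaded yet.
    pub fn missing<'a>(&self, sources: &'a [String]) -> Vec<&'a str> {
        sources
            .iter()
            .filter(|s| !self.files.contains_key(*s))
            .map(|s| s.as_str())
            .collect()
    }
}
```

The map would only be handed to the loader once `missing` returns an empty list, so the loader never has to wait on a request mid-parse.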

After less than two hours of work, I got my game to run successfully in the browser and set up a pipeline in GitHub which builds and deploys the game to GitHub Pages!!!

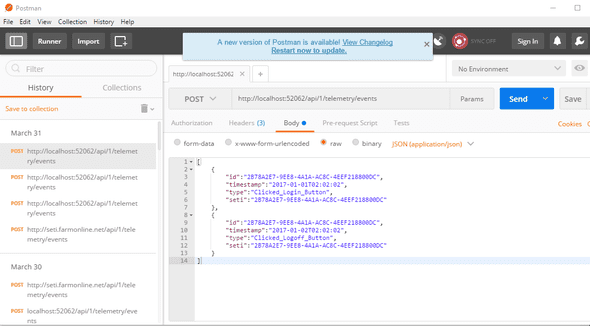

The next step was to improve the experience by adding a spinner while the WASM module loads, preloading assets once the WASM module has loaded, and adding explicit touch support for tablets.

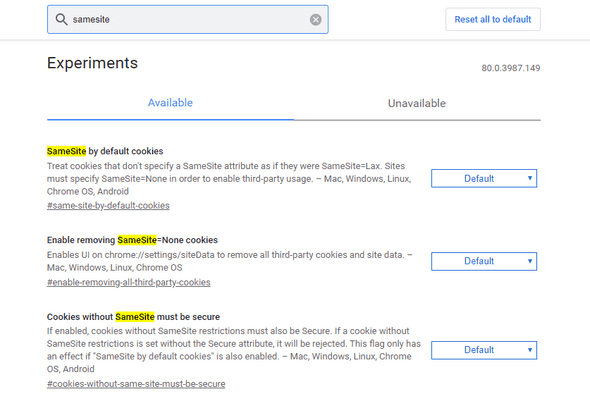

An issue I hit regarding tablet support was that I could not get my game to load reliably on Android Chrome. Only if I attached the Chrome debugger could I get the game to run - huh?!?! Firefox on mobile was OK.

After a lot of searching I found that Chrome 91 had a bug related to WASM loading. The Blazor guys were also affected by this bug, which was discussed here.

Apparently, the Google guys fixed the issue in Chrome 92, which I successfully verified, but without really knowing the underlying issue and thus why the bug manifests in Chrome 91 - scary!!!

For the touch support, I had to be somewhat creative since winit, the windowing library Bevy uses, does not support touch events on mobile browsers. The solution I concocted was to implement touch events in JavaScript, collect the touch data and let Rust ‘pop’ the touch events - and then map these events to my input system.
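A sketch of what such a push/pop bridge might look like. The post does not say where the queue lives, so this version assumes it sits on the Rust side. `TouchEvent`, `push_touch` and `pop_touch` are made-up names, and in a real build `push_touch` would additionally need to be exported to JavaScript (for example through wasm-bindgen), which is left out here to keep the example std-only.

```rust
use std::cell::RefCell;
use std::collections::VecDeque;

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum TouchPhase {
    Start,
    Move,
    End,
}

#[derive(Debug, Clone, Copy, PartialEq)]
pub struct TouchEvent {
    pub phase: TouchPhase,
    pub x: f32,
    pub y: f32,
}

thread_local! {
    // Assumes the game runs on a single thread, as a plain WASM module in a page does.
    static TOUCH_QUEUE: RefCell<VecDeque<TouchEvent>> = RefCell::new(VecDeque::new());
}

/// Would be called from the page's touchstart/touchmove/touchend handlers.
pub fn push_touch(event: TouchEvent) {
    TOUCH_QUEUE.with(|q| q.borrow_mut().push_back(event));
}

/// Called by the input system each frame until it returns `None`.
pub fn pop_touch() -> Option<TouchEvent> {
    TOUCH_QUEUE.with(|q| q.borrow_mut().pop_front())
}
```

Draining the queue once per frame keeps the events in arrival order and means the game never has to react to a browser callback in the middle of an update.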

For the touch support, I opted for a simple single-touch experience where one can drag a path for the tank to follow and then let an ‘autopilot AI’ handle the driving of the tank. Shooting is handled by simply tapping. Seems to work OK.
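To illustrate the idea of following a dragged path, here is a hypothetical waypoint follower. It is not the game's actual autopilot, which the post does not describe; `Autopilot`, `steer` and `arrive_radius` are names invented for this sketch, and `arrive_radius` is assumed to be positive.

```rust
use std::collections::VecDeque;

/// Follows a list of waypoints, e.g. the points sampled while dragging a finger.
pub struct Autopilot {
    waypoints: VecDeque<(f32, f32)>,
    arrive_radius: f32,
}

impl Autopilot {
    pub fn new(path: &[(f32, f32)], arrive_radius: f32) -> Self {
        Self {
            waypoints: path.iter().copied().collect(),
            arrive_radius,
        }
    }

    /// Returns the unit direction to drive in from `pos`,
    /// or `None` once every waypoint has been reached.
    pub fn steer(&mut self, pos: (f32, f32)) -> Option<(f32, f32)> {
        while let Some((tx, ty)) = self.waypoints.front().copied() {
            let (dx, dy) = (tx - pos.0, ty - pos.1);
            let dist = (dx * dx + dy * dy).sqrt();
            if dist <= self.arrive_radius {
                // Close enough: drop this waypoint and aim for the next one.
                self.waypoints.pop_front();
            } else {
                return Some((dx / dist, dy / dist));
            }
        }
        None
    }
}
```

Each frame the game would call `steer` with the tank's current position and feed the returned direction into the same input path a keyboard or gamepad would use.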

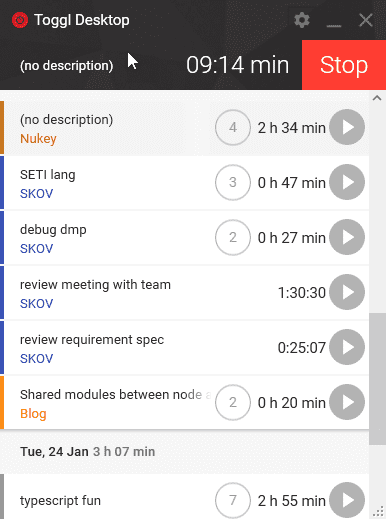

Anyway - it took me about 10 hours to port my game to the browser, including touch support.

The game can be played here.

Rust is awesome!!!