How to disable the Azure AD password expiration policy through PowerShell

June 22, 2020 - Søren Alsbjerg Hørup

We recently encountered a problem with the automated tests of a cloud solution. The solution uses Azure AD as its identity provider and currently holds several test user accounts used by those tests.

The tests were green for several weeks, but suddenly turned red because the passwords had expired! No problem, we thought, we simply disable password expiration for the test users in the AD. But after traversing the Azure Portal, we did not find any way to disable or change the password expiration policy (WTF!)

After some Googling, I came to the conclusion that it is not possible to change the policy through the portal, but that it is possible through PowerShelling (is this a term I can use? :-P)

Firstly, the AzureAD module must be installed in PowerShell:

Install-Module AzureAD

This will populate PowerShell with Azure AD specific cmdlets.

Next, the specific subscription needs to be selected:

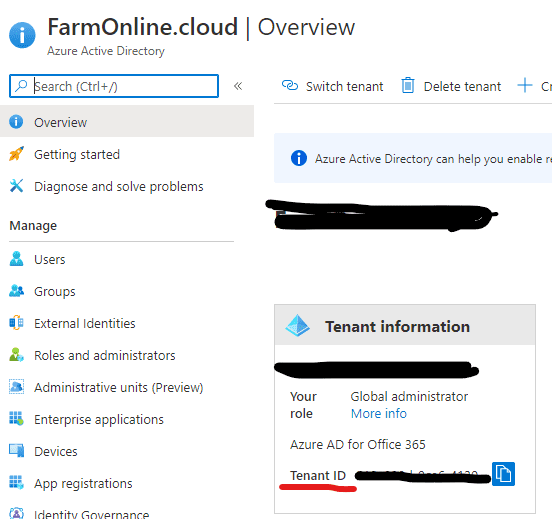

Select-AzureSubscription -TenantId <GUID>The GUID can be found Portal under Tenant ID:

Lastly, the following command gets the test user from the AD and sets the password policy to “DisablePasswordExpiration”:

Get-AzureADUser -ObjectId "testuser@XYZ.onmicrosoft.com" | Set-AzureADUser -PasswordPolicies DisablePasswordExpiration

That’s it! The password should no longer expire for the given user!
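
If you have more than one test account, or simply want to double-check that the policy stuck, something like the sketch below might help. It assumes the AzureAD module is installed and that the session is already signed in to the right tenant (for example via Connect-AzureAD). The `$testUsers` list and the `PasswordNeverExpires` column name are made up for illustration, so swap in your own accounts.

```powershell
# Sketch only: assumes the AzureAD module is installed and the session
# is already authenticated against the tenant that holds the test users.

# Hypothetical list of test accounts; replace with your own UPNs.
$testUsers = @(
    "testuser@XYZ.onmicrosoft.com"
)

foreach ($upn in $testUsers) {
    # Disable password expiration for this account.
    Set-AzureADUser -ObjectId $upn -PasswordPolicies DisablePasswordExpiration

    # Read the user back and report whether the policy is now present.
    # -match is used so the check also works if other policies are set too.
    Get-AzureADUser -ObjectId $upn |
        Select-Object UserPrincipalName,
            @{ Name = "PasswordNeverExpires"
               Expression = { $_.PasswordPolicies -match "DisablePasswordExpiration" } }
}
```

Reading the user back after the change is a cheap way to catch a typo in the UPN or a missing permission before the tests turn red again.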